Category Archives: Data Structures

Hash Table and Magic (Relatively Primes) Numbers

Harder, Better, Faster, Stronger

Today, let’s talk about hash tables. Or, more precisely, one type of secondary probing technique, one that uses some number theory—but not quadratic residues, just relatively prime numbers.

I don’t know if you’re like me, but it often bugs me when I read something in a textbook that seems to make sense but is claimed without proof or demonstration (oh, I think I said that before). That seems to happen particularly often when I read stuff about data structures, and this time I decided to explore one of those claims.


Cycle Detection in Graphs

In graph theory, the term cycle may refer to a closed path. If repeated vertices are allowed, it is more often called a closed walk. If the path is a simple path, with no repeated vertices or edges other than the starting and ending vertices, it may also be called a simple cycle, circuit, circle, or polygon; see Cycle graph. A cycle in a directed graph is called a directed cycle.

The term cycle may also refer to:

- An element of the binary or integral (or real, complex, etc.) cycle space of a graph. This is the usage closest to that in the rest of mathematics, in particular algebraic topology. Such a cycle may be called a binary cycle, integral cycle, etc.

- An edge set that has even degree at every vertex; also called an even edge set or…


Hashing – a programmer’s perspective

As a programmer, I would say this is one of the best and most important techniques that I have come across so far.

What is hashing?

To understand this, let us look at some of the definitions from the dictionary.

- Hash (noun) – a dish of diced or chopped vegetables, a jumble

- Hash (verb) – to chop into small pieces; make into hash

- Hashing (Computers) – a technique for locating data in a file by applying a transformation, usually arithmetic, to a key

In simple words, it is a technique to transform a larger piece of data into a small key (a word or number) and then use this key to identify that data whenever it is required. Let us take an example to understand this.

A simple example is the phone book. When we want to call a person named Sada, what exactly do we do? We go to the phone book, click on “S”…


Loop detection in Singly Linked List.

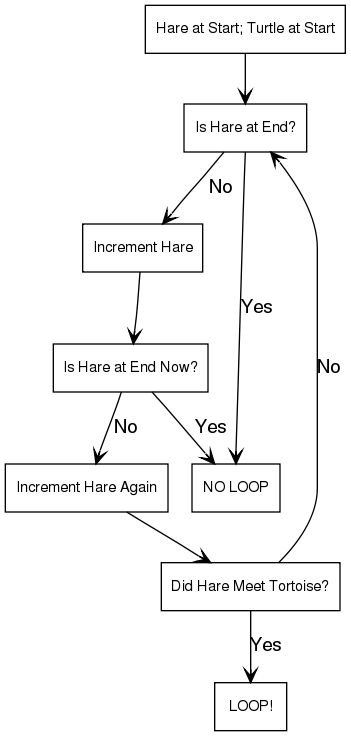

Floyd’s Cycle Detection Algorithm (The Tortoise and the Hare):

In brief: how do you determine if your singly-linked list has a cycle?

In the late 1960s, Robert W. Floyd invented an algorithm that works in linear (O(N)) time. It is also called Floyd’s cycle detection algorithm or The Tortoise and the Hare.

The easiest solution to the cycle detection problem is to run through the list, keeping track of which nodes you visit, and on each node check to see if it is the same as any of the previous nodes. It’s pretty obvious that this runs in quadratic (O(N^2)) time… not very efficient, and actually more complicated than this one.

The Tortoise and the Hare is possibly the most famous cycle detection algorithm, and is surprisingly straightforward. The Tortoise and the Hare are both pointers, and both start at the top of the list. For each iteration…


Euclid, Prime numbers, and the Inclusion-Exclusion Principle

Harder, Better, Faster, Stronger

The Euclidean algorithm, the algorithm to compute the greatest common divisor, or $\gcd$, of two numbers, dates back to antiquity (obviously). We can use it to make a fast test for primality, at least up to some confidence, and that’s where the inclusion-exclusion principle comes in.

Let us begin with the Euclidean algorithm. Originally, the algorithm was done by successive subtraction (which quickly becomes tedious as numbers grow), but the modern version, at least the one that I know of, uses divisions and remainders (modulo), and computes the $\gcd(a,b)$ of two numbers $a$ and $b$ in $O(\lg\min(a,b))$ steps (counting division as an $O(1)$ operation), which is very fast.


Threaded Binary Search Tree in C++

/*********************************************************************************************************

* Threaded Binary Search Tree Implementation *

* Programmer: Graham Nedelka *

* Created in April, 2013 *

* Computer Science 116: Data Structures *

*********************************************************************************************************/

#include <iostream>

#include <iomanip>

using namespace std;

struct bstNode{

// This constructs a ‘node’ container to serve as reference points for a threaded binary search

// tree. Theoretically, each node will point to another container known as a ‘child.’ In this case

// the children are located to the left and to the right of the current node. The nodes to the left

// of the current node contain integers smaller than itself, and larger to the right. What differentiates

// this implementation from binary search tree is the ‘threaded’ aspect, where the nodes within the

// in-order binary tree point to their predecessors, instead of pointing to NULL by default. This allows

// for a shorter processing time in retrieval of data…


Self-Sorting Linked List in C++

/*********************************************************************************************************

* Self Sorting Linked List *

* Programmer: Graham Nedelka *

* Created in May, 2013 *

* Computer Science 116: Data Structures *

*********************************************************************************************************/

#include <iostream>

#include <time.h>

#include <fstream>

#include <iomanip>

#include <vector>

using namespace std;

struct node{

int data;

node *next;

node *previous;

public:

node(int value){data = value; next = NULL; previous = NULL;};

};

class LL{

node *head;

node *tail;

public:

LL() {head = NULL;tail = NULL;};

void add(int number);

void show();

};

void LL::add(int value){

node *current = new node(value);

if (head == NULL){

tail = current;

head = current;

}

else{

node *temp = head;

node *temptail = tail;

if (value < temp->data){

// Reuse the node allocated above instead of allocating a second one and leaking the first.

current->next = head;

head = current;

return;

}

while(temp->next != NULL){

if (value > temp->data and value < temp->next->data){

current->next = temp->next;

temp->next = current;

temp = temp->next;

}

else if (current->data > temptail->data){

temptail->next…


Representing Large Fixed-Width Integers in C++

Large integers are common in many applications. In cryptography, for example, integers of 1,000 bits and larger are not uncommon. The implementation I present here is based on a binary representation of numbers using a bitset, at a cost of some performance. What I lose in performance I more than make up for in simplicity. A more efficient implementation of arbitrary-precision numbers could easily fill a book.

The BigInt template below uses the bitset from the <bitset> header (see my last post) to let you represent unsigned integers using a fixed number of bits specified as a template parameter.

//big_int.hpp

#ifndef BIG_INT_HPP

#define BIG_INT_HPP

#include <bitset>

#include "bitset_arithmetic.hpp" // from my last post of bitset manipulation.

template<unsigned int N>

class BigInt

{

typedef BigInt self;

public:

BigInt( ) : bits( ) { }

BigInt(const self& x) : bits(x.bits) { }

BigInt(unsigned long x) {

int n = 0;

while (x) {

bits[n++] = x & 0x1;

x >>= 1;

}

}

explicit BigInt(const std::bitset<N>& x) : bits(x) { }

// public functions

bool operator[](int n) const { return bits[n]; }

unsigned long toUlong( ) const { return bits.to_ulong( ); }

// operators

self& operator<<=(unsigned int n) {

bits <<= n;

return *this;

}

self& operator>>=(unsigned int n) {

bits >>= n;

return *this;

}

self operator++(int) {

self i = *this;

operator++( );

return i;

}

self operator--(int) {

self i = *this;

operator--( );

return i;

}

self& operator++( ) {

bool carry = false;

bits[0] = fullAdder(bits[0], 1, carry);

for (int i = 1; i < N; i++) {

bits[i] = fullAdder(bits[i], 0, carry);

}

return *this;

}

self& operator--( ) {

bool borrow = false;

bits[0] = fullSubtractor(bits[0], 1, borrow);

for (int i = 1; i < N; i++) {

bits[i] = fullSubtractor(bits[i], 0, borrow);

}

return *this;

}

self& operator+=(const self& x) {

bitsetAdd(bits, x.bits);

return *this;

}

self& operator-=(const self& x) {

bitsetSubtract(bits, x.bits);

return *this;

}

self& operator*=(const self& x) {

bitsetMultiply(bits, x.bits);

return *this;

}

self& operator/=(const self& x) {

std::bitset<N> tmp;

bitsetDivide(bits, x.bits, bits, tmp);

return *this;

}

self& operator%=(const self& x) {

std::bitset<N> tmp;

bitsetDivide(bits, x.bits, tmp, bits);

return *this;

}

self operator~( ) const { return self(~bits); } // the bitset constructor is explicit

self& operator&=(self x) { bits &= x.bits; return *this; }

self& operator|=(self x) { bits |= x.bits; return *this; }

self& operator^=(self x) { bits ^= x.bits; return *this; }

// friend functions

friend self operator<<(self x, unsigned int n) { return x <<= n; }

friend self operator>>(self x, unsigned int n) { return x >>= n; }

friend self operator+(self x, const self& y) { return x += y; }

friend self operator-(self x, const self& y) { return x -= y; }

friend self operator*(self x, const self& y) { return x *= y; }

friend self operator/(self x, const self& y) { return x /= y; }

friend self operator%(self x, const self& y) { return x %= y; }

friend self operator^(self x, const self& y) { return x ^= y; }

friend self operator&(self x, const self& y) { return x &= y; }

friend self operator|(self x, const self& y) { return x |= y; }

// comparison operators

friend bool operator==(const self& x, const self& y) {

return x.bits == y.bits;

}

friend bool operator!=(const self& x, const self& y) {

return x.bits != y.bits;

}

friend bool operator>(const self& x, const self& y) {

return bitsetGt(x.bits, y.bits);

}

friend bool operator<(const self& x, const self& y) {

return bitsetLt(x.bits, y.bits);

}

friend bool operator>=(const self& x, const self& y) {

return bitsetGtEq(x.bits, y.bits);

}

friend bool operator<=(const self& x, const self& y) {

return bitsetLtEq(x.bits, y.bits);

}

private:

std::bitset<N> bits;

};

The BigInt template class can be used to represent factorials, as shown below:

//Using the big_int class

#include "big_int.hpp"

#include <iostream>

#include <vector>

#include <iterator>

#include <algorithm>

using namespace std;

void outputBigInt(BigInt<1024> x) {

vector<int> v;

if (x == 0) {

cout << 0;

return;

}

while (x > 0) {

v.push_back((x % 10).toUlong( )); // BigInt exposes toUlong( ), not to_ulong( )

x /= 10;

}

copy(v.rbegin( ), v.rend( ), ostream_iterator<int>(cout, ""));

cout << endl;

}

int main( ) {

BigInt<1024> n(1);

// compute 32 factorial

for (int i=1; i <= 32; ++i) {

n *= i;

}

outputBigInt(n);

}

The program output is:

263130836933693530167218012160000000


Minimum Spanning Tree Algorithms

Given an undirected, connected graph G=(V,E), one might be concerned with finding a subset ST of edges from E that “span” the graph by ensuring that the graph remains connected. If we further require that the total weight of the edges in ST is minimized, then we are interested in finding a minimum spanning tree (MST). PRIM’S ALGORITHM shows how to construct an MST from such a graph by using a greedy approach in which each step of the algorithm makes forward progress toward a solution without reversing earlier decisions. PRIM’S ALGORITHM grows a spanning tree T one edge at a time until an MST results (and the resulting spanning tree is provably minimum).

It randomly selects a start vertex s∈V to belong to a growing set S, and it ensures that T forms a tree of edges rooted at s. PRIM’S ALGORITHM is greedy in that it incrementally adds edges to T until an MST is computed. The intuition behind the algorithm is that the edge (u,v) with lowest weight between u∈S and v∈V–S must belong to the MST. When such an edge (u,v) with lowest weight is found, it is added to T and the vertex v is added to S.

The algorithm uses a priority queue to store the vertices v∈V–S with an associated priority equal to the lowest weight of some edge (u,v) where u∈S. This carefully designed approach ensures the efficiency of the resulting implementation.

Solution

The C++ solution below relies on a binary heap to provide the implementation of the priority queue that is central to PRIM’S ALGORITHM. Ordinarily, using a binary heap would be inefficient because of the check in the main loop for whether a particular vertex is a member of the priority queue (an operation not supported by binary heaps). However, the algorithm ensures that vertices are only removed from the priority queue as it processes, so we need only maintain a status array inQueue[] that is updated whenever a vertex is extracted from the priority queue.

In another implementation optimization, we maintain an external array key[] that records the current priority key for each vertex in the queue, which again eliminates the need to search the priority queue for a given vertex identifier.

/**

* Prim’s Algorithm implementation with binary heap

*

* Given undirected graph, compute MST starting from a randomly

* selected vertex. Encoding of MST is done using 'pred' entries.

*/

void mst_prim (Graph const &graph, vector<int> &pred)

{

// initialize pred[] and key[] arrays. Start with arbitrary

// vertex s=0. Priority Queue PQ contains all v in G.

const int n = graph.numVertices( );

pred.assign(n, -1);

vector<int> key(n, numeric_limits<int>::max( ));

key[0] = 0;

BinaryHeap pq(n);

vector<bool> inQueue(n, true);

for (int v = 0; v < n; v++)

{

pq.insert(v, key[v]);

}

while (!pq.isEmpty( ))

{

int u = pq.smallest( );

inQueue[u] = false;

// Process all neighbors of u to find if any edge beats best distance

for (VertexList::const_iterator ci = graph.begin(u); ci != graph.end(u); ++ci)

{

int v = ci->first;

if (inQueue[v])

{

int w = ci->second;

if (w < key[v])

{

pred[v] = u;

key[v] = w;

pq.decreaseKey(v, w);

}

}

}

}

}

Consequences

For dense graphs, the priority queue can be implemented instead with a Fibonacci heap. This improves the performance to O(E+V*log V), a significant speedup over the binary heap implementation.

Analysis

The initialization phase of PRIM’S ALGORITHM inserts each vertex into the priority queue (implemented by a binary heap) for a total cost of O(V log V). The decreaseKey operation in PRIM’S ALGORITHM requires O(log q) performance, where q is the number of elements in the queue, which will never exceed |V|. It can be called at most 2*|E| times since each vertex is removed once from the priority queue and each undirected edge in the graph is visited exactly twice. Thus the total performance is O((V+2*E)*log V), or O((V+E)*log V).
